In-memory caching and in-memory data storage are both techniques used to improve the performance of applications by storing frequently accessed data in memory. However, they differ in their approach and purpose.

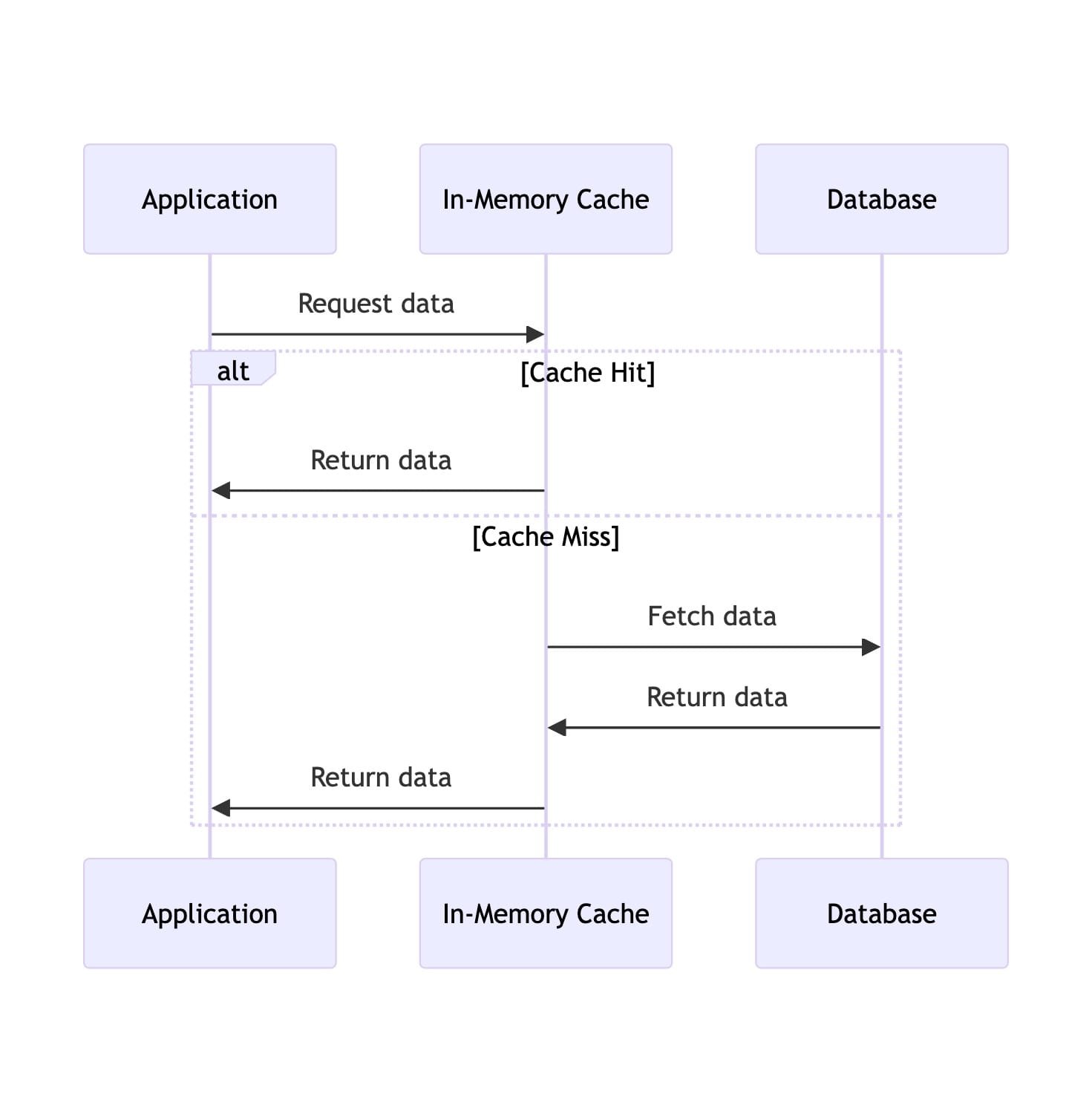

What is In-Memory Caching?

In-memory caching is a method where data is temporarily stored in the system’s primary memory (RAM). This approach significantly reduces data access time compared to traditional disk-based storage, leading to faster retrieval and improved application performance.

Key Features:

- Speed: Reads are served from RAM instead of disk, giving near-instant access for high-performance applications.
- Temporary Storage: Cached data is ephemeral and is primarily used for frequently accessed data.
- Reduced Load on Primary Database: Serving frequently requested data from the cache reduces the number of queries to the main database.

Common Use Cases:

- Web Application Performance: Improving response times in web services and applications.
- Real-Time Data Processing: Essential in scenarios such as stock trading platforms, where speed is critical.
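A common way to use an in-memory cache is the cache-aside pattern: check the cache first and query the database only on a miss, storing the result with an expiry time. The sketch below is a minimal, single-process illustration under stated assumptions: `TTLCache`, `cache_aside_get`, and `fake_db_lookup` are hypothetical names, and the "database" is a stand-in function that counts how often it is queried. Products such as Redis or Memcached add networking, memory limits, and eviction policies that this sketch omits.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Hypothetical in-process cache whose entries expire ttl_seconds after being set."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._entries: Dict[Any, Tuple[Any, float]] = {}  # key -> (value, expires_at)

    def get(self, key: Any) -> Any:
        entry = self._entries.get(key)
        if entry is None:
            return None  # miss: key was never cached
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._entries[key]  # expired: drop it so the caller reloads from the source
            return None
        return value

    def set(self, key: Any, value: Any) -> None:
        self._entries[key] = (value, self.clock() + self.ttl)


def cache_aside_get(cache: TTLCache, key: Any, load_from_db: Callable[[Any], Any]) -> Any:
    """Cache-aside read: try the cache first and query the database only on a miss."""
    value = cache.get(key)
    if value is None:
        value = load_from_db(key)
        cache.set(key, value)
    return value


# Hypothetical stand-in for a slow database query; counts how often it is called.
db_queries = 0


def fake_db_lookup(user_id: int) -> dict:
    global db_queries
    db_queries += 1
    return {"id": user_id, "name": f"user-{user_id}"}


cache = TTLCache(ttl_seconds=60)
for _ in range(3):
    cache_aside_get(cache, 42, fake_db_lookup)
print(db_queries)  # 1: only the first read reached the "database"
```

Because `None` doubles as the miss marker, this sketch never caches `None` values; a fuller implementation would use a dedicated sentinel object.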

In-Memory Caching: This is a method to store data temporarily in the system’s main memory (RAM) for rapid access. It’s primarily used to speed up data retrieval by avoiding the need to fetch data from slower storage systems like databases or disk files. Examples include Memcached and Redis (when Redis is used as a cache).

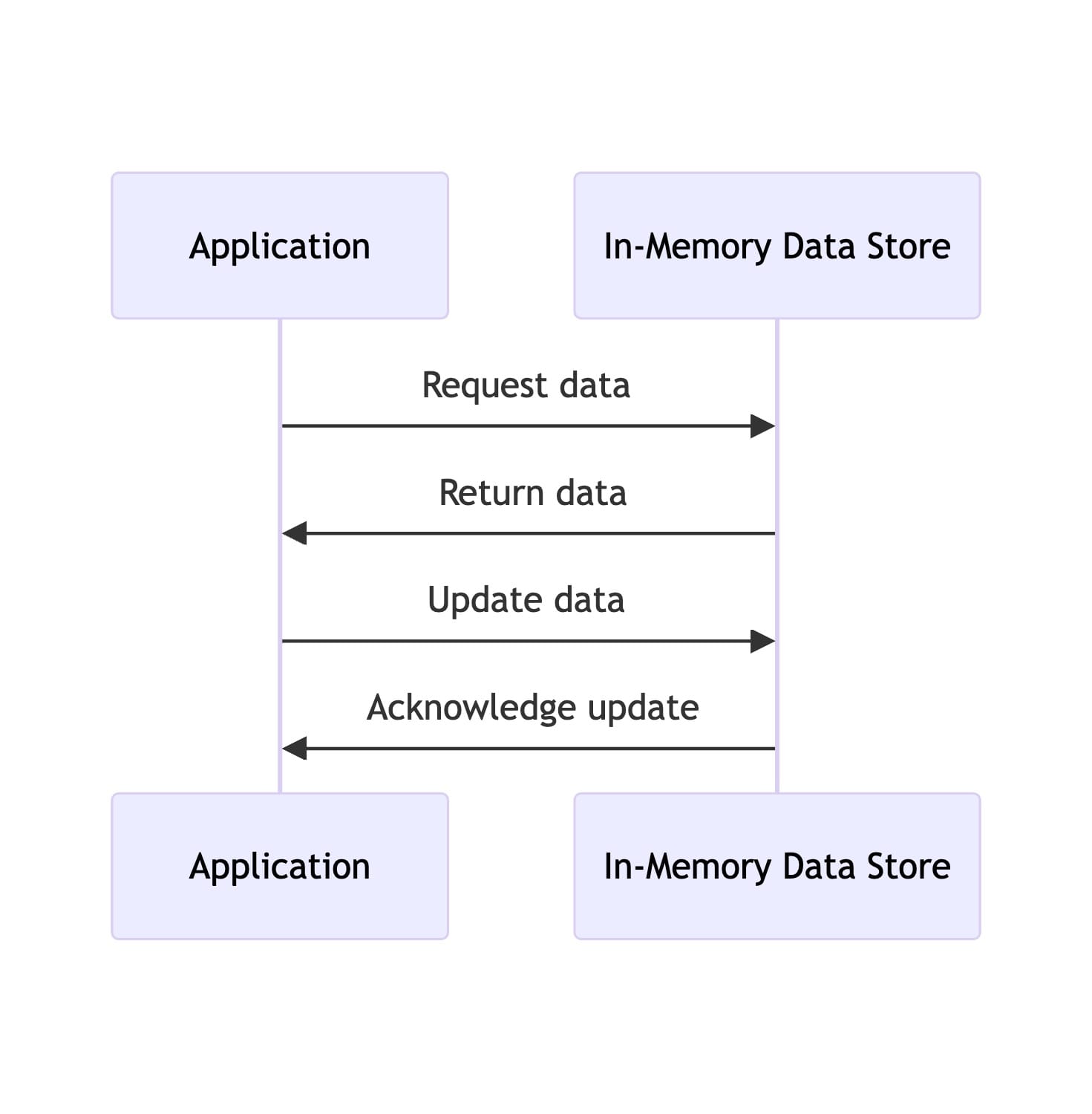

What is an In-Memory Data Store?

An In-Memory Data Store is a type of database management system that utilizes main memory for data storage, offering high throughput and low-latency data access.

Key Features:

- Persistence: Unlike caches, in-memory data stores can persist data, making them suitable as primary data storage solutions.
- High Throughput and Low Latency: Ideal for applications requiring rapid data processing and manipulation.
- Scalability: Can scale out, for example by partitioning data across nodes, to manage large volumes of data.

Common Use Cases:

- Real-Time Analytics: Used in scenarios requiring quick analysis of large datasets, such as fraud detection systems.
- Session Storage: Maintaining user session information in web applications.
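To illustrate how an in-memory data store can keep the full dataset in RAM and still persist it, here is a hypothetical sketch that writes every change to an append-only log file and replays that log on startup. The idea is loosely similar to the append-only file option in Redis, but `AppendOnlyStore` and its JSON log format are invented for this example and do not reflect how any particular product is implemented.

```python
import json
import os
import tempfile


class AppendOnlyStore:
    """Hypothetical in-memory key-value store: the full dataset lives in a dict,
    and every write is appended to a log file that is replayed on startup."""

    def __init__(self, log_path: str):
        self.log_path = log_path
        self.data: dict = {}
        self._replay()

    def _replay(self) -> None:
        # Rebuild the in-memory state from the log, if one exists.
        if not os.path.exists(self.log_path):
            return
        with open(self.log_path, "r", encoding="utf-8") as log:
            for line in log:
                record = json.loads(line)
                if record["op"] == "set":
                    self.data[record["key"]] = record["value"]
                elif record["op"] == "delete":
                    self.data.pop(record["key"], None)

    def _append(self, record: dict) -> None:
        # Log the operation before applying it; fsync asks the OS to flush it to disk.
        with open(self.log_path, "a", encoding="utf-8") as log:
            log.write(json.dumps(record) + "\n")
            log.flush()
            os.fsync(log.fileno())

    def set(self, key: str, value) -> None:
        self._append({"op": "set", "key": key, "value": value})
        self.data[key] = value

    def delete(self, key: str) -> None:
        self._append({"op": "delete", "key": key})
        self.data.pop(key, None)

    def get(self, key: str):
        return self.data.get(key)  # reads never touch the disk


log_path = os.path.join(tempfile.mkdtemp(), "store.log")
store = AppendOnlyStore(log_path)
store.set("session:abc", {"user_id": 42})
store.set("session:xyz", {"user_id": 7})
store.delete("session:xyz")

# Simulate a restart: a fresh instance rebuilds its state from the log.
restarted = AppendOnlyStore(log_path)
print(restarted.get("session:abc"))  # {'user_id': 42}
print(restarted.get("session:xyz"))  # None
```

Reads are served entirely from memory; only writes pay the cost of a disk append. Real systems also compact the log or take snapshots so that replay on restart stays fast.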

In-Memory Data Store: This refers to a data management system where the entire dataset is held in the main memory. It’s not just a cache but a primary data store, ensuring faster data processing and real-time access. Redis, when used as a primary database, is an example.

Comparing In-Memory Caching and In-Memory Data Store

| Aspect | In-Memory Caching | In-Memory Data Store |
|---|---|---|
| Purpose | Temporary data storage for quick access | Primary data storage for high-speed data processing |
| Data Persistence | Typically non-persistent | Persistent |
| Use Case | Reducing database load, improving response time | Real-time analytics, session storage, etc. |
| Scalability | Limited by memory size, often used alongside other storage solutions | Highly scalable, can handle large volumes of data |

Advantages and Limitations

In-Memory Caching

Advantages:

- Reduces database load.
- Improves application response time.

Limitations:

- Data volatility.
- Limited storage capacity.

In-Memory Data Store

Advantages:

- High-speed data access and processing.
- Data persistence.

Limitations:

- Higher cost due to large RAM requirements.
- Complexity in data management and scaling.
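A cache's limited storage capacity is usually handled by eviction: when the cache is full, some entry is dropped to make room. The following is a minimal sketch of one common policy, least recently used (LRU), built on Python's `collections.OrderedDict`; `LRUCache` is a hypothetical class for illustration, not any product's API.

```python
from collections import OrderedDict
from typing import Any


class LRUCache:
    """Hypothetical fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity: int):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._entries: OrderedDict = OrderedDict()  # ordered from least to most recently used

    def get(self, key: Any, default: Any = None) -> Any:
        if key not in self._entries:
            return default
        self._entries.move_to_end(key)  # mark as most recently used
        return self._entries[key]

    def put(self, key: Any, value: Any) -> None:
        if key in self._entries:
            self._entries.move_to_end(key)
        self._entries[key] = value
        if len(self._entries) > self.capacity:
            self._entries.popitem(last=False)  # evict the least recently used entry

    def keys(self) -> list:
        return list(self._entries)  # least to most recently used


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")       # "a" becomes the most recently used entry
cache.put("c", 3)    # over capacity: evicts "b"
print(cache.keys())  # ['a', 'c']
```

For caching function results inside a single Python process, the standard library's `functools.lru_cache` decorator applies the same policy.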

Choosing the Right Approach

The choice between in-memory caching and an in-memory data store depends on the application's needs:

- Performance vs. Persistence: Choose caching to speed up data retrieval from an existing database, and an in-memory data store when you need persistent, high-speed data processing.
- Cost vs. Complexity: In-memory caching is cheaper and simpler, but it may not offer the durability and data-management capabilities some applications require; an in-memory data store provides them at the price of more RAM and operational complexity.

Summary

To summarize, here are the key differences between in-memory caching and in-memory data stores:

- Caches hold a subset of hot data; in-memory stores hold the full dataset.
- Caches load data on demand; in-memory stores load data up front.
- Caches are kept in sync with the underlying database separately, often asynchronously; in-memory stores apply writes directly because they are the system of record.
- Cached data can become stale when the underlying database changes, and entries can be expired or evicted at any time; an in-memory store is the source of truth, so there is no second copy to fall out of date.
- Caches are suited to performance optimization; in-memory stores enable new kinds of applications, such as real-time analytics.
- Caches lose their contents on restart and must repopulate; in-memory stores typically reload their data from disk (for example, from snapshots or logs) after a restart.
- Caches require less memory, while in-memory stores need enough memory to hold the full dataset.
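A toy illustration of two of these differences: a cache loads data on demand and can keep serving an old copy after the database changes, while an in-memory data store is the system of record, so a write is visible immediately. Plain dictionaries stand in for the database, the cache, and the store; all names are hypothetical.

```python
# Hypothetical stand-ins: a backing database and a dict used as a cache in front of it.
database = {"price:AAPL": 100}
cache = {}


def cached_read(key):
    if key not in cache:  # load on demand (cache-aside)
        cache[key] = database[key]
    return cache[key]


first = cached_read("price:AAPL")  # miss: copies 100 into the cache
database["price:AAPL"] = 105       # another writer updates the database only
stale = cached_read("price:AAPL")  # hit: still returns the old copy, 100

cache.pop("price:AAPL", None)      # invalidate the cached entry
fresh = cached_read("price:AAPL")  # miss again: reloads 105

# An in-memory data store holds the full dataset and is itself the system of record,
# so there is no second copy to fall out of sync.
store = {"price:AAPL": 100}        # full dataset loaded up front
store["price:AAPL"] = 105
current = store["price:AAPL"]

print(first, stale, fresh, current)  # 100 100 105 105
```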
